Technical SEO Checklist – 20+ Proven Methods to Improve Site Visibility in Search Engines

Last Updated on April 27, 2023 by Subhash Jain

Your website may not be ranking in organic search results as you expected after an intensive SEO campaign. What could be the reason? Do you need to put more effort into building backlinks? Are your SEO practices not up to the mark? Your SEO approach may be right and still fail to deliver because of technical issues with your website's structure and performance. Have you ever thought about technical SEO? Optimizing your website's technical parameters and practices through technical SEO is the key to getting the best SEO results.

What is Technical SEO?

Technical SEO is a set of practices for optimizing a website's technical parameters to meet the requirements of modern search engines such as Google. Crawling, indexing, rendering, and website architecture are the core pillars of technical SEO.

Why do Business Websites Need Technical SEO?

Many websites with quality content still struggle to gain visibility in search results. Even if all of your site's content is indexed by Google, that doesn't mean your site will rank as you expect. Technical SEO addresses the top expectation of website owners and digital marketers: seeing the website rank in the top search results. Here, I compile 20+ proven tips from my experience to help web developers, business owners, SEO professionals, digital marketers, and others improve website visibility in search engines.

Website Structure – Silo Structure

From an SEO perspective, site structure is very important. Website structure optimization covers how information is presented, the website's design, and how pages are connected. There are four traditional types of website structure: hierarchical, sequential (linear), database, and matrix. Over time, the silo structure has become the #1 choice of website designers.

A silo structure organizes quality content into groups by topic. Grouping related information makes it easier for visitors and search engines to find the information relevant to a query.

Tips for a silo website structure – Put content into appropriate categories, reflect the category hierarchy in the URL structure, internally link groups of related pages, and design a flat site architecture.
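One way to sanity-check a silo structure is to group a site's URLs by their top-level folder and flag URLs buried deep in the hierarchy. The Python sketch below assumes each silo maps to the first path segment and uses path depth as a rough stand-in for how flat the architecture is; the example.com URLs and the `max_depth` of 3 are illustrative assumptions, not a standard.

```python
from collections import defaultdict
from urllib.parse import urlparse


def path_segments(url):
    """Split a URL path into its non-empty segments, e.g. '/services/seo/' -> ['services', 'seo']."""
    return [segment for segment in urlparse(url).path.split("/") if segment]


def group_into_silos(urls):
    """Group URLs by first path segment; the home page gets its own '(home)' bucket."""
    silos = defaultdict(list)
    for url in urls:
        segments = path_segments(url)
        silos[segments[0] if segments else "(home)"].append(url)
    return dict(silos)


def too_deep(urls, max_depth=3):
    """Return URLs nested more than max_depth folders deep (assumed threshold)."""
    return [url for url in urls if len(path_segments(url)) > max_depth]


urls = [  # hypothetical site URLs
    "https://www.example.com/",
    "https://www.example.com/services/seo/",
    "https://www.example.com/services/ppc/",
    "https://www.example.com/blog/2023/04/technical-seo/checklist/",
]
for silo, pages in group_into_silos(urls).items():
    print(silo, len(pages))
print("Too deep:", too_deep(urls))
```

Path depth is only a proxy; click depth from the home page (how many links a visitor follows) is what a flat architecture really aims to keep low.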

Use of Breadcrumbs for Better Navigation

A breadcrumb is a secondary navigation aid that shows users where they are on a website. It usually appears as a horizontal trail of text links. Breadcrumbs improve content accessibility.

Tips for structuring breadcrumbs – Keep breadcrumb navigation compact, show the full navigational path, order it from the top level down to the current page, keep breadcrumb titles consistent with page titles, etc.
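Breadcrumbs can also be described to search engines with Schema.org's `BreadcrumbList` type in JSON-LD. The minimal Python sketch below builds that markup from an ordered trail, top level first; the page names and example.com URLs are hypothetical.

```python
import json


def breadcrumb_jsonld(trail):
    """Build BreadcrumbList structured data from (name, url) pairs ordered from home to the current page."""
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": position, "name": name, "item": url}
            for position, (name, url) in enumerate(trail, start=1)
        ],
    }


trail = [  # hypothetical breadcrumb trail
    ("Home", "https://www.example.com/"),
    ("Services", "https://www.example.com/services/"),
    ("Technical SEO", "https://www.example.com/services/technical-seo/"),
]
markup = json.dumps(breadcrumb_jsonld(trail), indent=2)
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

Generating the markup from the same trail that renders the visible breadcrumb keeps the two consistent, which matches the tip about consistent breadcrumb titles.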

Use of Favicon To Display Brand Identity In Search Snippet

Favicons strengthen brand identity and UX. A favicon is a small image, traditionally 16×16 pixels, that appears next to a page's snippet in mobile and desktop search results.

Tips for designing favicons that display brand identity in search snippets – Use a square image of your logo or the first letter of your business name, try one of the free online favicon generators, and make sure the favicon stays clear in dark mode.

Site Should be Crawlable & Optimize Your Crawl Budget

Crawling is the first step before indexing. Googlebot crawls a page and passes it on for rendering and indexing. If you have added or changed web pages, ask Google to recrawl them.

Tips – Use Google's URL Inspection tool to request crawling of specific URLs. Optimizing the crawl budget (the number of URLs Googlebot crawls on your site in a given timeframe) means keeping the number of crawled pages in line with the number of indexed pages over that timeframe.

Tips – Improve page loading speed, interlink pages strategically, and avoid orphan pages (pages that no other page links to)…

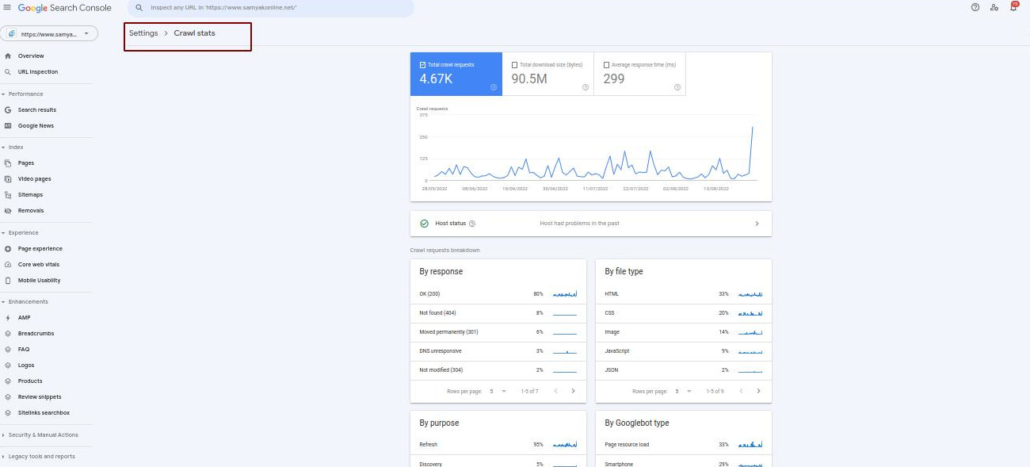

You can see a detailed Crawl Stats report in GSC by navigating to Settings > Crawl Stats.
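Orphan pages and deeply buried pages can be found by walking the internal link graph from the home page. The sketch below is a breadth-first search over a link map; it assumes you already have that map (for example, from a site crawler export) and a list of known pages (for example, from your CMS or sitemap). The paths shown are hypothetical.

```python
from collections import deque


def click_depths(links, home="/"):
    """Breadth-first search: minimum number of clicks from the home page to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS order is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links, known_pages, home="/"):
    """Known pages that cannot be reached by following internal links from the home page."""
    reachable = click_depths(links, home)
    return sorted(set(known_pages) - set(reachable))


links = {  # hypothetical internal link map: page -> pages it links to
    "/": ["/services/", "/blog/"],
    "/services/": ["/services/seo/"],
    "/blog/": [],
}
known_pages = ["/", "/services/", "/services/seo/", "/blog/", "/old-landing-page/"]
print(click_depths(links))
print("Orphans:", orphan_pages(links, known_pages))
```

Pages that show up as orphans are good candidates for new internal links, so crawlers can reach them without relying only on the sitemap.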

The Site Should Be Indexable

An indexable website is one whose pages search engines can crawl and add to their index. The higher a site's indexability, the better the chances that its pages are included in search results.

Tips – Optimize your internal linking scheme, fix pages with unintended noindex tags, track crawl status, add links to isolated web pages, and submit an XML sitemap to Google Search Console.
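A quick check for stray noindex directives looks at both the robots meta tag and the `X-Robots-Tag` HTTP header. The sketch below uses Python's built-in HTML parser; it assumes you have already fetched the page HTML and the header value, and for simplicity it ignores crawler-specific header forms such as `googlebot: noindex`.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() in ("robots", "googlebot"):
            content = attributes.get("content") or ""
            self.directives += [d.strip().lower() for d in content.split(",") if d.strip()]


def is_noindex(html, x_robots_tag=""):
    """True if the meta tags or the X-Robots-Tag header contain 'noindex' (or 'none', which implies it)."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    header = [d.strip().lower() for d in x_robots_tag.split(",") if d.strip()]
    directives = set(parser.directives) | set(header)
    return bool(directives & {"noindex", "none"})


print(is_noindex('<meta name="robots" content="noindex, follow">'))
```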

Ensure Proper Rendering of Web pages

Rendering is the process by which a browser, or Googlebot, turns a page's HTML, CSS, and JavaScript into the page users actually see. Fast, efficient rendering supports good Core Web Vitals scores. If Google can't render a page properly, content that depends on rendering may not be indexed.

Tips – Write valid HTML and CSS, use simple CSS selectors, animate elements that have absolute or fixed positioning, and optimize jQuery selectors…

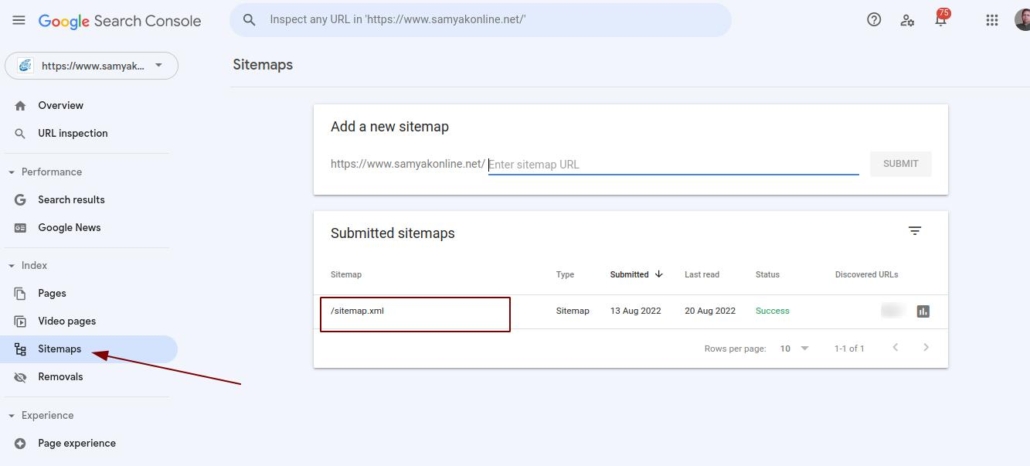
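A simple rendering sanity check is to confirm that your most important text is already present in the raw HTML the server sends, so it doesn't depend on JavaScript running first. The sketch below does not execute JavaScript; it assumes you have the raw HTML (for example, from "view source") and a hand-picked list of must-have phrases, both hypothetical here.

```python
from html.parser import HTMLParser


class StaticTextParser(HTMLParser):
    """Collects text present in the raw HTML, skipping <script> and <style> contents."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth:
            self.chunks.append(data)


def missing_without_js(raw_html, must_have):
    """Phrases from must_have that do not appear in the HTML before any JavaScript runs."""
    parser = StaticTextParser()
    parser.feed(raw_html)
    parser.close()
    text = " ".join(" ".join(parser.chunks).split()).lower()
    return [phrase for phrase in must_have if phrase.lower() not in text]


raw = (
    '<h1>Technical SEO Checklist</h1><div id="app"></div>'
    '<script>document.getElementById("app").textContent = "Pricing table";</script>'
)
print(missing_without_js(raw, ["Technical SEO Checklist", "Pricing table"]))
```

Anything reported as missing is only visible after rendering; Google can render JavaScript, but content in the initial HTML does not depend on that step succeeding.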

Create and Optimize Your XML Sitemap and Submit on GSC

An XML sitemap is a file that lists the URLs you want search engines to index. It can also give crawlers additional information about each URL, such as when it was last modified. A properly structured and submitted XML sitemap helps search engines crawl a website efficiently.

Submitting an XML sitemap in GSC (Google Search Console) is a four-step process:

- Generate the sitemap file and upload it to the root of your website

- Log in to your Search Console account and open Index > Sitemaps

- Enter the sitemap URL in the ‘Add a new sitemap’ field

- Click ‘Submit’

The next task is to optimize the XML sitemap. Here are four tips to get the best from XML sitemap submission:

- Use automation tools & plugins like Google XML Sitemaps

- Prioritize high-quality pages in the sitemap

- Use only canonical versions of URLs

- Use robots meta tags rather than robots.txt to keep pages out of the index
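Most sites use a plugin or CMS feature to generate the sitemap, but the format itself is simple. The Python sketch below builds a sitemap that follows the tips above by keeping only canonical, indexable URLs; the page-record shape (`url`, `canonical`, `lastmod`, `noindex`) is a hypothetical CMS export, not a standard.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from page records, keeping only indexable, canonical URLs."""
    ET.register_namespace("", SITEMAP_NS)  # emit xmlns="..." instead of an ns0: prefix
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for page in pages:
        if page["noindex"] or page["url"] != page["canonical"]:
            continue  # skip non-canonical duplicates and pages kept out of the index
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = page["url"]
        ET.SubElement(url, f"{{{SITEMAP_NS}}}lastmod").text = page["lastmod"]
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


pages = [  # hypothetical page records
    {"url": "https://www.example.com/", "canonical": "https://www.example.com/", "lastmod": "2023-04-27", "noindex": False},
    {"url": "https://www.example.com/services/", "canonical": "https://www.example.com/services/", "lastmod": "2023-04-20", "noindex": False},
    {"url": "https://www.example.com/services/?ref=nav", "canonical": "https://www.example.com/services/", "lastmod": "2023-04-20", "noindex": False},
    {"url": "https://www.example.com/thank-you/", "canonical": "https://www.example.com/thank-you/", "lastmod": "2023-01-10", "noindex": True},
]
print(build_sitemap(pages))
```

Save the output as `sitemap.xml` at the site root, then submit its URL in Search Console as described above.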

In GSC, you can submit your XML sitemap by navigating to Index > Sitemaps.

Install an SSL Certificate for Your Website & Use HTTPS for Security

Google uses HTTPS (enabled by an SSL certificate) as a ranking signal. Websites secured with SSL certificates are trusted more by users and search engines.

Tips – There are three types of SSL certificates: Domain Validation, Organization Validation, and Extended Validation. The reputation of the Certificate Authority (CA) also matters…
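An expired certificate breaks HTTPS for visitors and crawlers alike, so it is worth monitoring how many days remain before renewal. The sketch below uses only Python's standard `ssl` and `socket` modules; `www.example.com` is a placeholder host, and the fetch step needs network access.

```python
import socket
import ssl
import time


def days_until_expiry(not_after, now=None):
    """Days left before a certificate expires.

    not_after uses the format returned by SSLSocket.getpeercert(), e.g. 'Jun  1 12:00:00 2030 GMT'.
    """
    expires_at = ssl.cert_time_to_seconds(not_after)
    now = time.time() if now is None else now
    return (expires_at - now) / 86400


def fetch_not_after(host, port=443, timeout=10.0):
    """Open a verified TLS connection and return the server certificate's notAfter field."""
    context = ssl.create_default_context()  # checks the certificate chain and hostname
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]


# Usage (needs network access):
# print(days_until_expiry(fetch_not_after("www.example.com")))
```

Running a check like this on a schedule and alerting below some threshold (say 30 days, an arbitrary choice) gives time to renew before browsers start showing warnings.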

Set Preferred Version of Domain

All versions of the website should point to the preferred version. Setting a preferred domain (www or non-www) tells Google which version it should crawl and index.

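Tips – Use 301 redirects from the non-preferred version to the preferred one. In the old Webmaster Tools, you could also click “Settings” under “Configuration” and choose a “Preferred Domain”; Search Console has since retired this setting, so redirects and canonical tags are now the way to signal your preference.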
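The redirect logic for a preferred domain boils down to one rule: rewrite any request to the preferred scheme and host while keeping the path and query. The Python sketch below assumes `https://www.example.com` is the preferred version (a hypothetical choice) and that every input URL belongs to the same site; in production the rule would live in your server or CDN configuration.

```python
from urllib.parse import urlsplit, urlunsplit

PREFERRED_SCHEME = "https"
PREFERRED_HOST = "www.example.com"  # hypothetical preferred domain


def preferred_url(url):
    """Rewrite a URL on this site to the preferred scheme and host, keeping path and query."""
    parts = urlsplit(url)
    return urlunsplit((PREFERRED_SCHEME, PREFERRED_HOST, parts.path or "/", parts.query, ""))


def redirect_target(url):
    """Where a 301 should send this URL, or None if it already uses the preferred version."""
    target = preferred_url(url)
    return None if target == url else target


for url in ["http://example.com/about?x=1", "https://www.example.com/about"]:
    print(url, "->", redirect_target(url))
```

Returning `None` for URLs that already match is what prevents the rule from redirecting the preferred version to itself, a common source of redirect loops.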

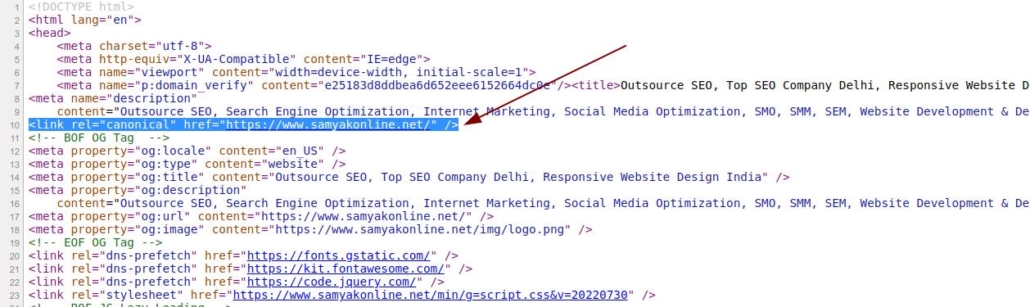

Use of Canonical Tags to Point to the Main URL of a Webpage

The canonical tag tells Google which page is the main one when several pages have nearly the same content. The “canonical URL” specifies the preferred version of a web page.

Tips – Make sure the canonical URL is accessible, always use the full (absolute) URL in the canonical tag, and remember that canonical tags also work across domains.

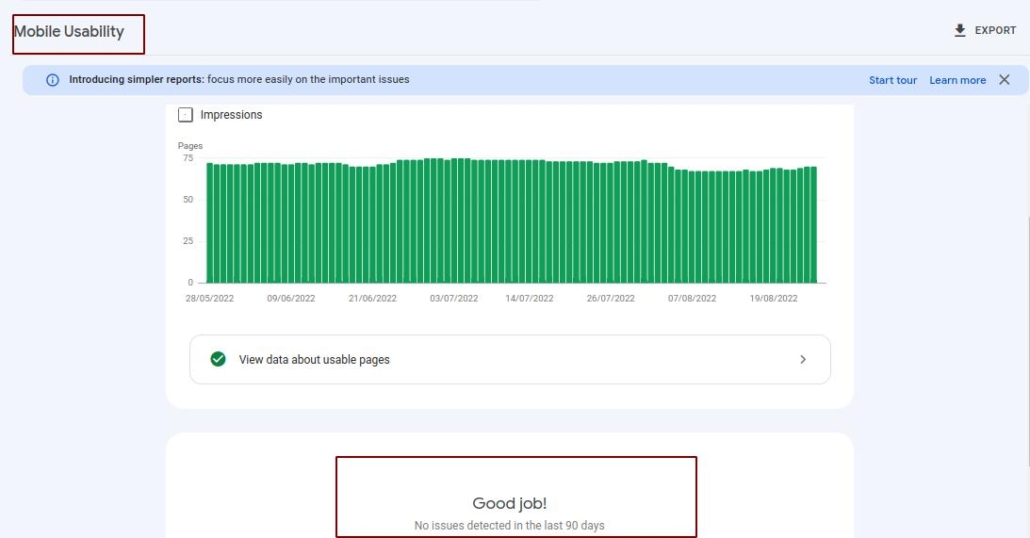
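The canonical tips above are easy to audit automatically: find the `<link rel="canonical">` tags on a page and check that there is exactly one and that it uses a full URL. The sketch below uses Python's built-in HTML parser; the sample markup and example.com URLs are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class CanonicalParser(HTMLParser):
    """Collects href values of <link rel="canonical"> tags."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and attributes.get("href"):
            self.canonicals.append(attributes["href"])


def audit_canonical(html):
    """Return (canonical URLs found, list of problems)."""
    parser = CanonicalParser()
    parser.feed(html)
    parser.close()
    problems = []
    if not parser.canonicals:
        problems.append("no canonical tag")
    elif len(parser.canonicals) > 1:
        problems.append("multiple canonical tags")
    for href in parser.canonicals:
        parsed = urlparse(href)
        if not (parsed.scheme and parsed.netloc):
            problems.append(f"relative canonical URL: {href}")
    return parser.canonicals, problems


print(audit_canonical('<link rel="canonical" href="/page/">'))
```

Checking that the canonical URL is accessible (returns 200) is a separate network step not covered by this sketch.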

Make Sure That Website Is Mobile Friendly

Google Search Console’s Mobile Usability report tells you about issues that make your pages hard to use on mobile devices. If Google finds pages that don’t work well on mobile, the report lists them along with the problems found. To check whether a web page is mobile-friendly, you can run Google’s Mobile-Friendly Test.

Tips – Add the viewport meta tag, use structured data, and allow Google to crawl your JavaScript, CSS, and image files…
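The viewport tip can be checked in code: a responsive page normally declares `width=device-width` in its viewport meta tag. The Python sketch below tests for exactly that and nothing more; it is a quick pre-check under that assumption, not a replacement for a full mobile-friendliness test.

```python
from html.parser import HTMLParser


class ViewportParser(HTMLParser):
    """Remembers the content of the <meta name="viewport"> tag, if any."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "viewport":
            self.viewport = attributes.get("content") or ""


def has_responsive_viewport(html):
    """True if the page declares width=device-width in its viewport meta tag."""
    parser = ViewportParser()
    parser.feed(html)
    parser.close()
    if parser.viewport is None:
        return False
    settings = {}
    for pair in parser.viewport.split(","):
        key, _, value = pair.partition("=")
        settings[key.strip().lower()] = value.strip().lower()
    return settings.get("width") == "device-width"


print(has_responsive_viewport('<meta name="viewport" content="width=device-width, initial-scale=1">'))
```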

Website Should Load Faster

There are a number of factors that influence the website speed; a website speed audit takes care of all those technicalities. Here I share some valuable tips for website speed audit and optimization.

Core Web Vitals Compliance – Core Web Vitals, which are important ranking factors, are the technical metrics Google uses to evaluate the quality of a page's user experience. They assess a website's performance on three aspects of page speed and user interaction: loading speed, responsiveness, and visual stability.

The most important Web Vitals metrics are –

- Largest Contentful Paint (LCP): 2.5 seconds or less

- First Input Delay (FID): 100 milliseconds or less

- Cumulative Layout Shift (CLS): 0.1 or less

(The Chrome User Experience Report gives a true picture of website performance for each Core Web Vital.)
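The thresholds above can be applied to your own field data. Google assesses each metric at the 75th percentile of page loads and publishes "good" and "poor" boundaries (LCP 2.5 s / 4 s, FID 100 ms / 300 ms, CLS 0.1 / 0.25). The Python sketch below encodes those boundaries; the nearest-rank percentile method and the sample values are illustrative assumptions.

```python
import math

# (upper bound for "good", lower bound for "poor") per metric.
THRESHOLDS = {
    "LCP": (2.5, 4.0),  # seconds
    "FID": (100, 300),  # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def p75(samples):
    """75th percentile using the nearest-rank method."""
    ordered = sorted(samples)
    return ordered[math.ceil(0.75 * len(ordered)) - 1]


def rate(metric, value):
    """Classify a metric value as 'good', 'needs improvement' or 'poor'."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value > poor:
        return "poor"
    return "needs improvement"


lcp_samples = [1.8, 2.1, 2.4, 3.9]  # hypothetical field measurements in seconds
print("LCP p75:", p75(lcp_samples), rate("LCP", p75(lcp_samples)))
```

Using the 75th percentile rather than the average means a page passes only when most visits, not just typical ones, meet the target.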

Reduce Server Response Time: A common benchmark is a server response time under 200 ms. Dozens of factors can increase response time. So, how can you reduce server response time to improve UX and SEO results?

Tips – Use a fast web hosting service, use a content delivery network (CDN), optimize your database, minify scripts…

Test Load Time with and without a CDN – A CDN can make a website load faster for visitors, which improves UX. Google offers a free page speed tool, PageSpeed Insights (built on the open-source Lighthouse), that provides suggestions for improving page speed.

When you test load time with a CDN, consider these tips – test from different geographic locations, test specific content types, analyze network performance, and check SLA requirements.
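For a quick, repeatable comparison of the origin server and the CDN, you can time how long the first byte takes to arrive over several requests and compare the median with a budget. The Python sketch below measures a rough time to first byte (it includes DNS, connection, and TLS setup); the 200 ms budget and both hostnames are assumptions for illustration.

```python
import statistics
import time
import urllib.request


def time_to_first_byte_ms(url, timeout=10.0):
    """Rough time to first byte in ms, including DNS lookup, connection and TLS setup."""
    start = time.perf_counter()
    with urllib.request.urlopen(url, timeout=timeout) as response:
        response.read(1)  # wait for the first byte of the body
        return (time.perf_counter() - start) * 1000


def summarize(samples_ms, budget_ms=200):
    """Median of several samples compared against a response-time budget (200 ms assumed)."""
    median = statistics.median(samples_ms)
    return {"median_ms": median, "within_budget": median <= budget_ms}


# Hypothetical usage (needs network access): compare the origin server with the CDN hostname.
# for label, url in [("origin", "https://origin.example.com/"), ("cdn", "https://www.example.com/")]:
#     samples = [time_to_first_byte_ms(url) for _ in range(5)]
#     print(label, summarize(samples))
```

The median is used because single requests are noisy; running the same script from machines in different regions covers the geo-location tip.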

Fix “Too Many Redirects” & Eliminate Redirect Loops

‘ERR_TOO_MANY_REDIRECTS’ is a common error that hurts UX, brand reputation, and rankings. It appears when redirects send the browser into an infinite loop, so the browser can never determine which URL to load.

Tips to eliminate redirect loops: reset the .htaccess file, evaluate third-party plugins, check your SSL certificate setup, and clear the browser cache and the website's cookies…
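Before changing server files, it helps to trace where a redirect chain actually goes. The Python sketch below follows a map of source-to-target redirects and reports whether the chain ends cleanly, loops, or runs too long; the rule map is hypothetical (it could come from your redirect configuration or a crawler export), and `max_hops=10` is an arbitrary limit.

```python
def trace_redirects(redirects, start, max_hops=10):
    """Follow a {source: target} redirect map from start.

    Returns (status, path) where status is 'ok', 'loop' or 'too many redirects'.
    """
    path = [start]
    while path[-1] in redirects:
        next_url = redirects[path[-1]]
        if next_url in path:
            return "loop", path + [next_url]
        path.append(next_url)
        if len(path) - 1 > max_hops:
            return "too many redirects", path
    return "ok", path


rules = {  # hypothetical rules that bounce between http/https and www/non-www
    "http://example.com/": "https://example.com/",
    "https://example.com/": "https://www.example.com/",
    "https://www.example.com/": "http://example.com/",
}
print(trace_redirects(rules, "http://example.com/"))
```

Here the last rule sends the preferred version back to the start, which is exactly the kind of conflict (for example, between an SSL plugin and an .htaccess rule) that produces ERR_TOO_MANY_REDIRECTS.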

Identify & Minimize Render-Blocking Resources like CSS, JS, and Images: The major render-blocking resources are stylesheets, HTML imports, scripts, etc. They delay the first render of the page and can also increase First Input Delay. Tools like Lighthouse, PageSpeed Insights, and GTmetrix help you identify render-blocking resources.

My tips to minimize render-blocking resources include:

- Don’t add CSS with @import rule

- Use media attribute for conditional CSS

- Split CSS into critical & non-critical parts

- Minimize CSS and JavaScript files & remove unused CSS and JavaScript

- Set a Browser Cache Policy

- Keep the HTML page size under 15 MB (Googlebot only processes the first 15 MB of an HTML file)

- Minify HTML and minimize main-thread work

- Limit the HTTP Requests
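To find candidates for the tips above, you can scan a page for stylesheets that apply to all media and for scripts in the `<head>` that lack `async`, `defer`, or `type="module"`. The Python sketch below is a rough heuristic under those assumptions (for example, it treats only `media="all"`/`"screen"` stylesheets as blocking); Lighthouse remains the authoritative check.

```python
from html.parser import HTMLParser


class RenderBlockingParser(HTMLParser):
    """Rough detector for render-blocking CSS and JavaScript referenced from the HTML."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.blocking = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "head":
            self.in_head = True
        elif tag == "link" and "stylesheet" in (attributes.get("rel") or "").lower().split():
            # Assumption: stylesheets with no media, media="all" or media="screen" block rendering;
            # conditional ones such as media="print" are skipped.
            if (attributes.get("media") or "all").lower() in ("all", "screen"):
                self.blocking.append(("css", attributes.get("href")))
        elif tag == "script" and self.in_head and attributes.get("src"):
            deferred = "async" in attributes or "defer" in attributes or attributes.get("type") == "module"
            if not deferred:
                self.blocking.append(("js", attributes.get("src")))

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def find_render_blocking(html):
    parser = RenderBlockingParser()
    parser.feed(html)
    parser.close()
    return parser.blocking


page = (
    '<head><link rel="stylesheet" href="/main.css">'
    '<link rel="stylesheet" href="/print.css" media="print">'
    '<script src="/app.js"></script><script src="/analytics.js" async></script></head>'
)
print(find_render_blocking(page))
```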

Image Optimization

Well-optimized, unique, high-quality images are valuable assets that help a website rank well. An image quality audit is a must if you want your website to outrank competitors' websites. What should you do for image optimization?

My tips for a website image audit will help you improve SEO gains –

- Use proper image formats (JPEG 2000, JPEG XR, JPG, PNG, WebP)

- Specify image dimensions (width and height attributes let the browser reserve space for each image, which prevents layout shifts)

- Compress images (use graphic editor tools; compressed images make the website load faster)

- Lazy load offscreen images (offscreen images load only when they are needed)

- Use responsive images that adapt to different device sizes
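Several items on this checklist can be checked straight from the HTML. The Python sketch below flags `<img>` tags without width/height, without an `alt` attribute, without a `srcset`, or (as a heuristic assumption) not lazy-loaded when they are not the first image on the page. An empty `alt=""` is accepted because it is valid for decorative images.

```python
from html.parser import HTMLParser


class ImageAuditParser(HTMLParser):
    """Flags <img> tags that miss a few common optimizations."""

    def __init__(self):
        super().__init__()
        self.image_count = 0
        self.issues = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        self.image_count += 1
        attributes = dict(attrs)
        src = attributes.get("src") or "(no src)"
        if not (attributes.get("width") and attributes.get("height")):
            self.issues.append((src, "missing width/height"))
        if "alt" not in attributes:  # alt="" is fine for decorative images
            self.issues.append((src, "missing alt"))
        if "srcset" not in attributes:
            self.issues.append((src, "no srcset for responsive sizes"))
        # Heuristic assumption: treat every image after the first as likely offscreen.
        if self.image_count > 1 and (attributes.get("loading") or "").lower() != "lazy":
            self.issues.append((src, "not lazy-loaded"))


def audit_images(html):
    parser = ImageAuditParser()
    parser.feed(html)
    parser.close()
    return parser.issues


html = (
    '<img src="/hero.webp" width="1200" height="600" alt="Hero" '
    'srcset="/hero-600.webp 600w, /hero.webp 1200w">'
    '<img src="/team.jpg" alt="">'
)
for src, issue in audit_images(html):
    print(src, issue)
```

The first image is left eager on purpose, since lazy-loading an above-the-fold hero image can delay Largest Contentful Paint.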

Identify and Fix Broken Links 404

Broken links derail your plan of offering easy-to-access, valuable information to your target audience. A bad user experience and weaker SEO are the usual outcomes of broken links that go unnoticed and unfixed. The Behavior tab in Google Analytics is a great free tool for finding broken links over a particular period: the “advanced” filter shows the ‘404 Page’ entries, and the resulting Broken Links 404 report can be exported to a spreadsheet. Here are three tips to fix broken links (404 errors):

- Update the link with correction

- Redirect the link to a new page

- Remove the broken links

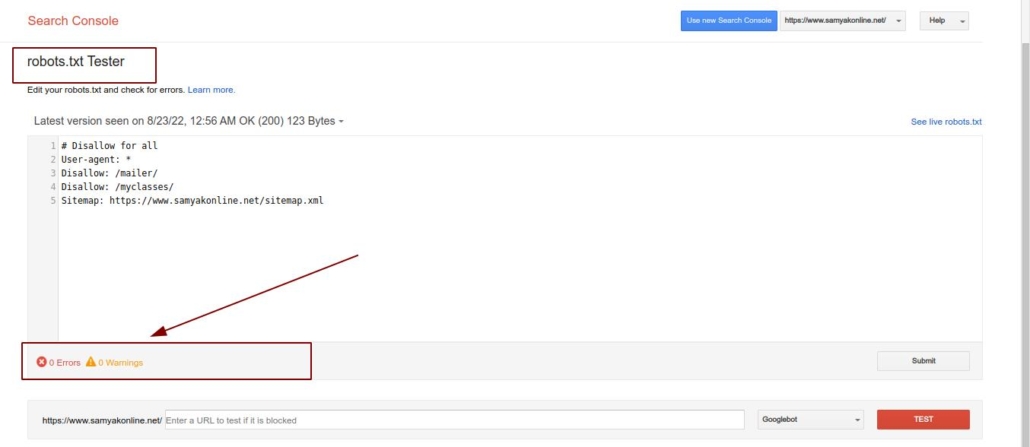
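If you have a list of internal links (for example, from a crawler export), you can also check their status codes yourself. The Python sketch below sends HEAD requests with the standard library and reports links that end in a 4xx or 5xx status; the link map is hypothetical, and the status lookup is passed in as a function so it can be swapped out or tested offline.

```python
import urllib.error
import urllib.request


def http_status(url, timeout=10.0):
    """Final HTTP status for url (urllib follows redirects); network errors are raised, not hidden."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:  # 4xx and 5xx responses arrive as HTTPError
        return err.code


def find_broken_links(links, status_of=http_status):
    """links maps each page to the URLs it links to; returns (page, target, status) for 4xx/5xx targets."""
    cache = {}
    broken = []
    for page, targets in links.items():
        for target in targets:
            if target not in cache:  # check each target only once
                cache[target] = status_of(target)
            if cache[target] >= 400:
                broken.append((page, target, cache[target]))
    return broken


# Usage (needs network access):
# print(find_broken_links({"https://www.example.com/": ["https://www.example.com/old-offer/"]}))
```

Because the report includes the linking page, each result points straight at where to update, redirect, or remove the link.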

Optimize Robots.txt File

Webmasters create a robots.txt file to tell search engine robots how to crawl a website's pages. Google usually finds and indexes all the important pages of a website on its own.

Then, why do you need to use a robots.txt file?

- You might have some pages that you don’t want search engines to crawl

- It helps you make the most of your crawl budget

- It can tell search engines not to crawl multimedia resources, saving crawl resources

My tips for using a robots.txt file with best practices – To update the crawling rules in your robots.txt file, you can download the file from the robots.txt Tester in GSC (Google Search Console).

Alternatively, you can view robots.txt in a browser by adding /robots.txt to the end of the domain or subdomain (for example, https://www.example.com/robots.txt).

The robots.txt feature of Screaming Frog SEO Spider also allows you to check and validate a robots.txt file.

Another tool I like for auditing and updating the robots.txt file is the Semrush Site Audit tool, which explores the entire domain to find the robots.txt file; a handy feature tells you if the robots.txt file doesn't point to your sitemap.xml file.

Tips to optimize the robots.txt file – Make it easy to find, make sure its rules are correct, place it at the root of the website, name it in lowercase only (robots.txt), and use only one robots.txt file per website…

You can use Google’s robots.txt Tester to verify that your robots.txt file is correct and accurate. The tool shows errors and warnings, if any.
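You can also test rules locally before uploading a robots.txt file. Python's standard `urllib.robotparser` module parses the file and answers "may this user agent fetch this URL?"; the rules and example.com URLs below are hypothetical. One caveat: this parser applies the first matching rule in file order, while Google uses the most specific (longest) matching rule, so results can differ when Allow and Disallow rules overlap.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in ["https://www.example.com/blog/technical-seo/", "https://www.example.com/admin/login"]:
    print(url, "allowed" if parser.can_fetch("Googlebot", url) else "blocked")

# site_maps() lists Sitemap: lines (Python 3.8+), which covers the "points to sitemap.xml" check.
print("Sitemaps:", parser.site_maps())
```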

Identify & Fix 301 Permanent Redirects

A 301 redirect indicates that a web page has moved permanently from one location to another. The web server sends the 301 HTTP status code to the browser, and all users requesting the old URL are redirected to the new URL.

When should you use 301 Permanent Redirect?

You can use a 301 permanent redirect when you are:

- Moving a web page permanently to a new URL

- Migrating the website to a new domain

- Moving from HTTP to HTTPS

- Merging two or more domains

- Resolving lowercase vs. uppercase URL issues

- Resolving trailing slash issues

Why should you fix 301 Permanent Redirects?

If URLs that return 301 status codes remain in your sitemap, they waste crawl budget, because Google will visit these abandoned URLs each time it recrawls your website. Permanently redirected URLs can also keep appearing in search results for a while.

How can you identify 301 Permanent Redirects?

You can identify 301 permanent redirects with many tools, such as Semrush.

How can you fix 301 Permanent Redirects?

The most common way to fix 301 permanent redirects is through the .htaccess file (on Apache servers).
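When fixing 301s in .htaccess, a useful habit is to point every old URL straight at its final destination instead of chaining redirects. The Python sketch below flattens a hypothetical old-path-to-new-path map and prints Apache mod_alias `Redirect 301` lines; `https://www.example.com` is an assumed preferred origin, and the script raises an error if it finds a loop.

```python
SITE = "https://www.example.com"  # hypothetical preferred origin


def final_destination(redirects, path, max_hops=10):
    """Follow a {old_path: new_path} map to the last hop; fail on loops or very long chains."""
    seen = {path}
    while path in redirects:
        path = redirects[path]
        if path in seen or len(seen) > max_hops:
            raise ValueError(f"redirect loop or overly long chain at {path}")
        seen.add(path)
    return path


def htaccess_rules(redirects):
    """One Apache mod_alias 'Redirect 301' line per old path, pointing straight at the final URL."""
    return [
        f"Redirect 301 {old} {SITE}{final_destination(redirects, old)}"
        for old in sorted(redirects)
    ]


print("\n".join(htaccess_rules({"/old-page": "/new-page", "/new-page": "/final-page"})))
```

Note that mod_alias `Redirect` matches URL-path prefixes, so a rule for `/old-page` also redirects `/old-page/anything` (appending the remainder); review overlapping paths before deploying.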

Identify & Fix 302 Temporary Redirects

A 302 redirect code tells visitors that a page has temporarily moved to another URL. SEOs have traditionally held that a 302 redirect neither passes “link juice” nor carries domain authority to the new location, although Google has said that all 3xx redirects pass PageRank. In general, browsers automatically detect 302 response codes and redirect requests to the new location.

How can you check 302 Temporary Redirects?

I recommend the SiteAnalyzer, RankWatch, and Geekflare tools for finding 302 temporary redirects.

How can you fix a 302 redirect?

Here are a few tips to fix 302 temporary redirects: determine whether each redirect is valid, check your plugins, make sure the URL configuration is right, check the server configuration, and contact your web hosting provider.
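To check whether a specific URL returns a 302 (rather than a 301), you need to look at the first response without following the redirect. The Python sketch below does that with a standard-library opener whose redirect handler declines to follow redirects, and classifies the status code; the URL in the usage comment is a placeholder, and some servers answer HEAD requests differently from GET.

```python
import urllib.error
import urllib.request


class _NoRedirect(urllib.request.HTTPRedirectHandler):
    """Stop urllib from following redirects so the first response can be inspected."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None  # returning None makes urllib raise HTTPError for the 3xx response


_opener = urllib.request.build_opener(_NoRedirect)


def first_hop(url, timeout=10.0):
    """Return (status code, Location header or None) for a single request, without following redirects."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with _opener.open(request, timeout=timeout) as response:
            return response.status, None
    except urllib.error.HTTPError as err:
        return err.code, err.headers.get("Location")


def classify(status):
    if status in (301, 308):
        return "permanent redirect"
    if status in (302, 303, 307):
        return "temporary redirect"
    return "not a redirect"


# Usage (needs network access):
# status, location = first_hop("http://www.example.com/old-promo/")
# print(status, classify(status), location)
```

A 302 that has been in place for a long time is usually a sign the move is really permanent and should become a 301.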

Strengthen UX Signals

Finding the gaps in UX and correcting them is also part of technical SEO.

Technical SEO tracks important UX KPIs such as task success rate, user error rate, time on task, System Usability Scale (SUS), Customer Satisfaction Score (CSAT), and Net Promoter Score (NPS) to ensure an engaging UX.

After the release of RankBrain, Google started incorporating “User Experience Signals” into its search ranking algorithm.

The important UX signals are:-

Dwell Time

Dwell time is the amount of time a user spends on a web page before returning to the SERPs. Some signs suggest that Google uses dwell time as a ranking signal. Tips to increase dwell time: embed videos, use presentations (PPT), write longer content broken into small chunks, and maximize page speed…

Pogo Sticking

Pogo sticking is when users jump between different search results to find the resource most relevant to their query. Google introduced the “People also search for” box to reduce pogo sticking and improve UX.

Tips to stop people from pogo sticking after visiting your site – Add internal links above the fold, use a large font size (15px to 17px), use a table of contents, refresh the content for relevance and wider scope…

Bounce Rate

Bounce rate is the percentage of visitors who leave a web page without taking any action. Bounce rate is closely correlated with first-page search rankings. It may not be a direct ranking factor, but it affects a website's ranking indirectly.

Tips to improve bounce rate, and thus website ranking – Embed YouTube videos, improve loading speed, use PPT templates, write easy-to-read content that satisfies search intent, design the best…
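Bounce rate is a simple ratio. Under the classic Universal Analytics definition, a bounce is roughly a single-page session, so the rate is bounced sessions divided by all sessions. The Python sketch below assumes you have page-view counts per session (hypothetical data); note that GA4 instead defines bounce rate as the share of sessions that were not engaged, so its figures will differ.

```python
def bounce_rate(pageviews_per_session):
    """Percentage of sessions with exactly one page view (classic Universal Analytics-style definition)."""
    if not pageviews_per_session:
        return 0.0
    bounces = sum(1 for views in pageviews_per_session if views == 1)
    return 100 * bounces / len(pageviews_per_session)


sessions = [1, 3, 1, 2]  # hypothetical page views per session
print(f"Bounce rate: {bounce_rate(sessions):.1f}%")
```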

Organic CTR

Organic click-through rate (CTR) is the percentage of searchers who click your page after seeing it in organic search results. Although organic CTR depends on ranking position, it is also influenced by the title tag, URL, rich snippets, meta description, etc.

Google has confirmed that it uses CTR in its algorithm.

Tips to improve organic CTR – Use a number and brackets in the title tag, include numbers in the headline, use the year in the title and description, optimize the meta description…
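Organic CTR is clicks divided by impressions. A practical way to apply the tips above is to find queries that get plenty of impressions but few clicks, since better titles and descriptions can pay off most there. The Python sketch below works on rows shaped like a Search Console performance export; the rows and both thresholds (1,000 impressions, 2% CTR) are hypothetical.

```python
def ctr(clicks, impressions):
    """Organic CTR as a percentage: clicks / impressions * 100."""
    return 100 * clicks / impressions if impressions else 0.0


def low_ctr_queries(rows, min_impressions=1000, max_ctr=2.0):
    """Queries with plenty of impressions but a CTR below max_ctr percent (both thresholds are assumptions)."""
    flagged = []
    for row in rows:
        rate = ctr(row["clicks"], row["impressions"])
        if row["impressions"] >= min_impressions and rate < max_ctr:
            flagged.append((row["query"], round(rate, 2)))
    return sorted(flagged, key=lambda item: item[1])


rows = [  # hypothetical rows from a Search Console performance export
    {"query": "technical seo checklist", "clicks": 12, "impressions": 2400},
    {"query": "seo audit", "clicks": 90, "impressions": 3000},
    {"query": "crawl budget", "clicks": 1, "impressions": 200},
]
print(low_ctr_queries(rows))
```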

Search Intent

Search intent is the goal behind a user's query. It may be informational, navigational, transactional, or commercial. If a page doesn't satisfy users' search intent with high-quality content, it won't rank.

My tips to help you satisfy users' search intent – Know the search intent, optimize UX, update existing content, optimize commercial pages, use keywords with multiple intents…

Accelerated Mobile Pages (AMP)

Accelerated Mobile Pages (AMP) is an open-source framework developed by Google in collaboration with Twitter to deliver the best possible experience to mobile users.

Google began integrating AMP results into mobile search results on February 24, 2016.

Competitive website speed, higher CTR, and better discoverability are the key benefits of optimized AMP pages. Here are 3 tips to help you optimize AMP performance for your website – use a content delivery network, use a reliable server, and use AMP performance optimizers…

Hreflang Attribute for International Websites: Language & Geo-Targeting

The hreflang attribute tells Google the language (and, optionally, the region) of a particular web page, so that Google can give priority to that page for users searching in that language.

The hreflang attribute is important for websites that serve an international audience in different languages. It is highly beneficial for geo-targeting, and it improves UX for each language segment of your audience.

Tips to optimize hreflang usage – Place hreflang annotations in the on-page markup, the sitemap, or the HTTP header; use ISO 639-1 format for language codes; and add hreflang annotations for every language version of your content…
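For on-page markup, every language version should carry the same complete set of `<link rel="alternate" hreflang="…">` tags, including one pointing to itself, plus an optional `x-default` fallback. The Python sketch below generates that set; the URLs are hypothetical, and its code check is deliberately simplified to an ISO 639-1 language code with an optional ISO 3166-1 alpha-2 region, so script subtags such as `zh-Hant` would be rejected.

```python
import re

# Simplified check: ISO 639-1 language code, optionally followed by an ISO 3166-1 alpha-2 region.
HREFLANG_PATTERN = re.compile(r"^[a-z]{2}(-[a-z]{2})?$", re.IGNORECASE)


def hreflang_links(versions, x_default=None):
    """Build the full set of <link rel="alternate"> tags for a page's language versions.

    versions maps hreflang codes to absolute URLs; the same set, including a
    self-referencing tag, belongs in the <head> of every version.
    """
    tags = []
    for code, url in sorted(versions.items()):
        if not HREFLANG_PATTERN.match(code):
            raise ValueError(f"unexpected hreflang code: {code}")
        tags.append(f'<link rel="alternate" hreflang="{code}" href="{url}" />')
    if x_default:
        tags.append(f'<link rel="alternate" hreflang="x-default" href="{x_default}" />')
    return "\n".join(tags)


versions = {  # hypothetical language versions of one page
    "en-us": "https://www.example.com/en-us/",
    "de": "https://www.example.com/de/",
}
print(hreflang_links(versions, x_default="https://www.example.com/"))
```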

Use of Schema for Rich Snippets

Schema (Schema.org) is a semantic vocabulary used by search engines. It describes the type of page content, such as a product, review, job posting, recipe, or business listing.

Structured data presented through Schema makes web pages easier for search engines to understand, which makes them eligible to appear in rich results. Tips – Use commonly used schemas, use all the schemas that your content needs, and use tools like Google Data Highlighter, Google's Structured Data Markup Helper, and Google's Rich Results Test.
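Schema markup is most often added as a JSON-LD script in the page head. The Python sketch below builds a minimal `LocalBusiness` example for the "business listing" case mentioned above; every business detail is hypothetical, and you should validate real markup with the Rich Results Test.

```python
import json

business = {
    "@context": "https://schema.org",
    "@type": "LocalBusiness",
    "name": "Example Digital Agency",  # hypothetical business details throughout
    "url": "https://www.example.com/",
    "telephone": "+1-555-0100",
    "address": {
        "@type": "PostalAddress",
        "streetAddress": "123 Example Street",
        "addressLocality": "Springfield",
        "postalCode": "12345",
        "addressCountry": "US",
    },
}

snippet = '<script type="application/ld+json">\n' + json.dumps(business, indent=2) + "\n</script>"
print(snippet)
```

Generating JSON-LD with `json.dumps` rather than by hand avoids quoting and escaping mistakes that make markup invalid.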

Identify and Remove Duplicate Content

Duplicate content harms SEO results considerably in many ways, including lower search rankings, poor UX, and decreased organic traffic.

Google Search Console is a powerful free tool to help you identify URLs that create duplicate content issues, such as HTTPS and HTTP versions of the same URL, URLs with and without a trailing “/”, URLs with and without capital letters, etc.

Tips to remove duplicate content – Add rel="canonical" links, use 301 redirects, use the robots meta noindex tag…
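Exact duplicates, such as the same page served over HTTP and HTTPS or with and without a trailing slash, can be grouped by hashing each page's normalized text. The Python sketch below assumes you have already extracted the main body text per URL (hypothetical data); it only catches identical text after whitespace and case normalization, so near-duplicates need a similarity technique instead.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text):
    """SHA-256 of the text after collapsing whitespace and lowercasing."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def duplicate_groups(pages):
    """pages maps URL -> main body text; returns groups of URLs with identical normalized text."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


pages = {  # hypothetical extracted page text
    "http://example.com/shoes": "Red  Shoes\nBuy now",
    "https://www.example.com/shoes/": "red shoes buy now",
    "https://www.example.com/hats/": "Hats",
}
print(duplicate_groups(pages))
```

Each group that comes back is a candidate for a 301 redirect or a rel="canonical" link pointing at the one URL you want indexed.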

Semantic SEO

Semantic SEO is the practice of optimizing a website to answer, in a hierarchical structure, all the questions a user may ask about a topic.

Content is developed for search engines around a topic, not just around keywords. Since the Hummingbird algorithm update, Google understands and evaluates a page for its overall topical relevance and value to the user.

The key benefits of in-depth, information-rich semantic SEO include improved UX, better rankings, more internal linking opportunities, and higher CTR.

Follow my tips to get the best results from semantic SEO – Understand user intent, use common search terms and long-tail keywords, make the content well researched and relevant, and use schema markup and metadata.

FAQs

Google doesn’t crawl password-protected web pages. I recommend creating teaser pages for them. On a teaser page, include a title and a brief description of the content behind the password. The teaser content is accessible, so this limited version of the password-protected page can be indexed.

Yes, using iframes in website design makes it harder for search engines to index your website. A typical framed web page consists of at least three files, while a non-framed page consists of one file, which makes non-framed pages simpler for search engines to index.

Yes, it is possible but challenging. Whether and how quickly you reach the #1 spot in search results depends on several factors, such as niche, competition, website parameters, and promotional strategy. Paid search campaigns can speed up results by quickly increasing traffic, visibility, and brand awareness.

According to Google, domain age is not a ranking factor. However, domain age can influence domain authority (a third-party metric).

You can use Google’s Indexing API to get pages crawled quickly instead of waiting. The Indexing API lets site owners notify Google when pages are added or removed. Note that Google officially supports it only for pages with JobPosting or BroadcastEvent (livestream) structured data.
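An Indexing API notification is a small authenticated POST to the `urlNotifications:publish` endpoint, with a JSON body naming the URL and the type (`URL_UPDATED` or `URL_DELETED`). The Python sketch below only prepares the request; it assumes you already have an OAuth 2.0 access token for the `https://www.googleapis.com/auth/indexing` scope (typically from a service account), which is out of scope here, and the job URL is hypothetical.

```python
import json
import urllib.request

ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications:publish"


def build_notification(url, access_token, deleted=False):
    """Prepare (but do not send) an Indexing API publish request for one URL."""
    body = {"url": url, "type": "URL_DELETED" if deleted else "URL_UPDATED"}
    return urllib.request.Request(
        ENDPOINT,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {access_token}",  # OAuth 2.0 token, obtained separately
        },
        method="POST",
    )


request = build_notification("https://www.example.com/jobs/seo-analyst/", "ACCESS_TOKEN")
# urllib.request.urlopen(request) would send it; that needs a real token and network access.
```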

Technical SEO is the practice of optimizing a website’s “back-end” parameters so that search engines can crawl, index, and rank the website better, while on-page SEO is the practice of optimizing individual web pages. Technical SEO addresses all the technical issues that may harm the website’s accessibility and ranking in search engines; on-page SEO addresses all the issues that may harm traffic.

An SSL certificate is a digital certificate that authenticates a website’s identity. SSL stands for Secure Sockets Layer, a security protocol that creates an encrypted link between a web browser and a web server (modern websites use its successor, TLS). Websites need an SSL certificate to secure online transactions and customer information; it also strengthens user trust.

About Author:

Subhash Jain is the Founder of Samyak Online. Contact us now if you want a technical SEO audit to increase your website’s visibility in Google.
